Prototyping with AI: An exploration of AI design tools

Given the massive hype around AI and my own general curiosity as to whether I could start relying more on technology to do some of the more laborious aspects of my job for me, I decided to take some time to explore whether any AI design tools can automate prototyping, a significant part of my role as a product designer.

At MakerX, we recently had a company-wide session that entailed brainstorming venture ideas and evaluating them on the basis of business viability (will this be sustainable or profitable?), technical feasibility (can we build this?), desirability (do people want this?), and strategic fit (does it leverage our core assets?).

The idea I contributed (excuse the not great name) was:

Figmai: a Figma plug-in that helps companies craft compelling and delightful user experiences by utilising AI to create prototypes, drawing upon libraries of common design patterns from leading companies and open-source design systems.

I think that if such a product existed and was actually able to deliver good results, it would automate a non-trivial amount of my work.

I figured surely something like this must already exist or be in development, and so I had a look around. While it was difficult to find anything that could stitch together a fully polished high-fidelity prototype, I was able to find a few products that at the very least could manage the design of a single interface.

*Note that in my research I focused specifically on platforms that had text-to-design functionality, as I think the core benefit of applying AI to this domain is not having to do the thinking yourself as to what a screen should look like. AI should be able to understand best practice and common patterns to basically do that work for you. There are currently products that can transform a sketch into a design that essentially just matches it, but I didn’t focus on these.

Here’s what I found:

Existing

- Takes simple text prompts and transforms them into mobile or desktop UI.

- Allows you to request edits and give feedback to iterate on a screen it devised.

- Allows you to adjust visual style by picking light or dark mode, a primary colour, typography, and border radius.

- Allows you to export the design to Figma.

- This was the only Figma plug-in I was able to find (for text to design) that seemed to work and was also directly related to prototyping rather than finer details like AI-generated images, copy, etc.

- I played around with it a bit - the results definitely weren’t amazing, but I can appreciate that it’s a start. I think the direct integration with Figma was a really good idea. Given the relative immaturity of AI design tools at the moment, there is still a real need to use Figma to edit and modify the output, so a plug-in directly in Figma is a great way to entice designers to make use of tools like this while they continue to mature.

Upcoming

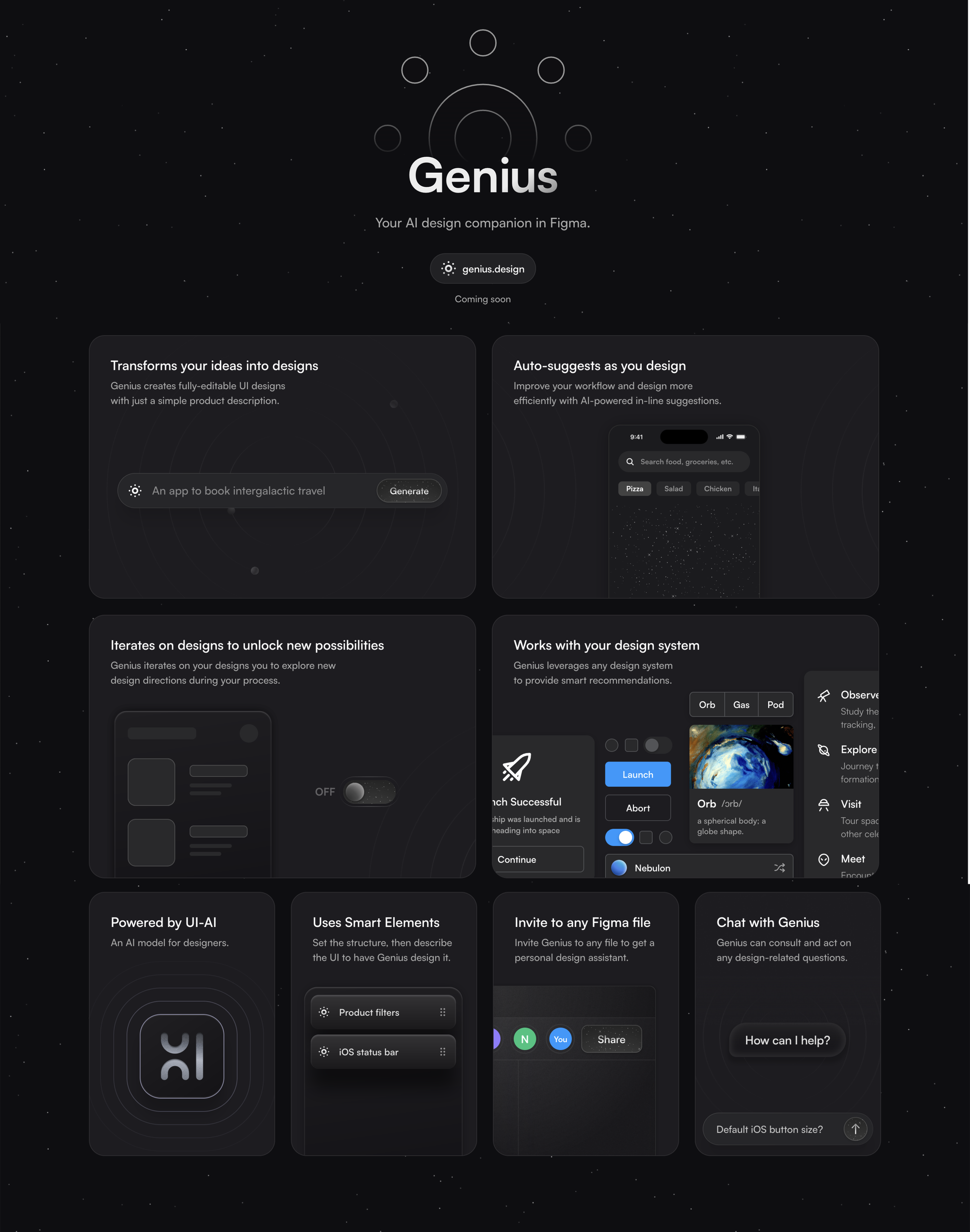

Genius by Diagram, acquired by Figma:

- Claims to have the ability to transform simple text prompts into fully-editable designs with the ability to iterate and work with your design system.

- I’m most excited and optimistic about this one. I can’t wait to try it when it comes out.

- Claims to soon be releasing a Text to Design feature and its marketing video shows it can produce an app (series of screens). Not much other info available at this stage.

Galileo

Since Galileo seems to be the most promising of the tools currently available (I’m sure Genius/Figma will likely surpass it once it is released), I’ll focus on sharing more of what I learned from playing around with it.

I decided that for the purpose of evaluating, I’d trial a range of different prompts - some simple/straightforward descriptions of a screen, and others a bit more vague and complex, requiring some interpretation and problem-solving.

I’ll share what transpired after I gave this specific prompt: design the home/dashboard page of a mobile banking app that allows users to hold and exchange global currencies.

There are a number of things that are off / don’t make sense, as exemplified by its responses (or lack thereof) to my above requests:

- It wasn’t able to deduce that the most important information for someone “holding and exchanging global currencies” would be their actual balance in those currencies. When I shared this information and asked it to make the balances more prominent, it did not do so and left them the same.

- It seems to have some grasp of some design principles, but not others. For instance, it kind of demonstrated an understanding of hierarchy, but then did not really allocate the items sensibly to the hierarchy it created. It put total balance, for instance, at the same level of hierarchy as any single currency balance.

- The exchange action, alongside the pay and deposit actions (which it didn’t even include), is one of the most important actions, yet it doesn’t appear prominent or look very action-like. It’s easy to miss compared with the equivalent action in other products in this space, like Wise.

- It was disappointing that it couldn’t complete my request to use similar components and styles to Airbnb. And when I took up its offer to instead “design an interface inspired by common design principles seen in popular apps,” it actually made zero changes besides switching it to light mode. In terms of visual design, I would like to be able to give both very specific prompts (e.g. “like a mix between the styles of Spotify and Uber”) and very broad prompts (e.g. “a cosmic, space-like, and playful design”), and have it come back with aesthetically pleasing outcomes that are also accurate with respect to the brief. I would like to be able to reference open-source design systems or well-known product companies and have it devise its own variant of the mentioned styles that is still unique enough not to breach any copyright restrictions.

From my brief and experimental interactions with Galileo, another thing I hope to see improved in future iterations would be the ability to give looser prompts that are objective/goal/problem-based rather than screen-based, like “design an app that encourages teenage children to drink a healthy amount of water each day.” (FYI it can do this, albeit not amazingly.)

These issues aside, Galileo did an okay job.

Would I ever use this design? No.

Do I think it would pass the standards of what anyone would expect from a product designer with my level of skill and experience? No.

Am I ready to go ahead and outsource my job? Unfortunately not.

Is it a maybe-useful reference for a product designer doing some initial thinking around a concept or brief? Sure.

Is it a not-so-bad starting point for a non-designer exploring an idea? Sure.

I think for someone already skilled in product design and adept at using Figma and leveraging Figma Community resources where appropriate, it’s probably quicker to just whip up something on your own and know that you’ll be able to trust the outcome vs. investing lots of time trying to correct and tweak Galileo’s output to something you’d be proud of sharing.

That said, it’s definitely fun to play around with tools like this and imagine what the future is going to be like.

Future speculation

While it’s clear that there are companies working on the automation of prototyping (including Figma itself), I don’t personally feel that the tools currently available are advanced enough to rely on in any meaningful way. I will, however, be eagerly following along as they continue to be developed, as I do believe there will come a day in the not-too-distant future when the results are essentially as good as what can be produced by a designer with 20+ years of experience at top product companies.

Upon sharing this with Stafford, he insightfully added:

If the product does improve to the point that the output is much higher, so too will the output from the 20+ year designer because they can use the tool. So can the product ever really reach the output of the best designers in the industry if it's just a tool designers in the industry use? The tool won't get any better if it doesn't improve, and the best designers don't get better if they don't improve, but the best tools and the best designers always improve, and the tools are probably already producing what the best designers were producing before they improved.

I honestly think the end-state for a system that is really successful at automating prototyping would essentially be making Figma (as it is now) redundant because the product itself would be capable of building and modifying its own design system and updating styles throughout, iterating on a flow, changing the information architecture, devising a new feature and responsively redesigning any parts of the app as needed, stitching together seamless interactions, etc.

The more likely scenario, and the one that is already underway anyway, is that Figma itself will pioneer this next generation of AI-assisted design, remain a market leader, and transform all aspects of its current product to leverage AI to its fullest.

I really like this quote from Noah Levin, Figma’s VP of Product Design:

The ceiling is how good a designer can be at designing, which is constrained by the available tooling; the floor is the minimum skill required for someone to participate in design. AI will lift this ceiling, leading to more creative outputs made possible by more powerful tools; it will also lower the floor, making it easier for anyone to design and visually collaborate.

And then what?

I think that the uptake of AI to automate prototyping brings about a number of questions around what the future of the role of a product designer might look like…

Will low-fidelity prototyping die given that time is no longer a tradeoff in producing high-fidelity work? Or will AI tools enable you to specify fidelity, as it will still remain important to discuss early concepts in a bare-bones flow that is visually agnostic?

As prompts become less instructional and more broad and creative, what biases might be inherent in solutions AI-generated prototypes suggest?

As technical and tooling-based design skills (besides knowing how to interact with design AI) become less important for future designers to have, will a core skill become the ability to exercise good judgement in determining what should change in an AI-prototyped output and what manual effort or prompts could produce a better result? And when “good judgement” becomes automatable too, then what?

I tend to agree with Wade’s sentiments that in tech professions, at least in the initial phases of AI, we will “overwhelmingly see job supplementation rather than a replacement,” although tbh I’m personally hoping my job as well as everyone else’s gets automated (in some carbon-negative way that someone figures out) and we can all sit back and enjoy some FALC.